Autoregressive (AR) models have achieved remarkable progress in language generation and are increasingly being explored for image synthesis. However, scaling AR models to high-resolution images remains a persistent challenge. Unlike text, which needs relatively few tokens, a high-resolution image requires thousands of tokens, and because self-attention cost grows quadratically with sequence length, compute grows quadratically with the number of image tokens. As a result, most AR-based multimodal models are constrained to low or medium resolutions, limiting their usefulness for detailed image generation. While diffusion models have shown promising results at high resolutions, they have their own limitations, including complex sampling procedures and slower inference. Addressing the token-efficiency bottleneck in AR models therefore remains a crucial open problem for scalable, practical high-resolution image synthesis.
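
To make the scaling concrete, here is a small Python sketch that counts image tokens at several resolutions and the relative self-attention cost. It assumes a hypothetical VQ tokenizer that emits one token per 16×16 pixel patch; the tokenizers used by the models discussed here may use a different downsampling factor, and the helper names are illustrative only.

```python
def image_token_count(height: int, width: int, downsample: int = 16) -> int:
    """Tokens emitted for one image, assuming one token per
    downsample x downsample pixel patch (hypothetical tokenizer)."""
    if height % downsample or width % downsample:
        raise ValueError("resolution must be divisible by the downsampling factor")
    return (height // downsample) * (width // downsample)


def relative_attention_cost(num_tokens: int, reference_tokens: int) -> float:
    """Self-attention work grows with the square of the sequence length."""
    return (num_tokens / reference_tokens) ** 2


if __name__ == "__main__":
    base = image_token_count(512, 512)
    for side in (512, 1024, 2048):
        n = image_token_count(side, side)
        cost = relative_attention_cost(n, base)
        print(f"{side}x{side}: {n} tokens, {cost:.0f}x the attention cost of 512x512")
```

Under that assumption, a 2048×2048 image needs 16,384 tokens, 16 times as many as a 512×512 image, and roughly 256 times the attention work.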

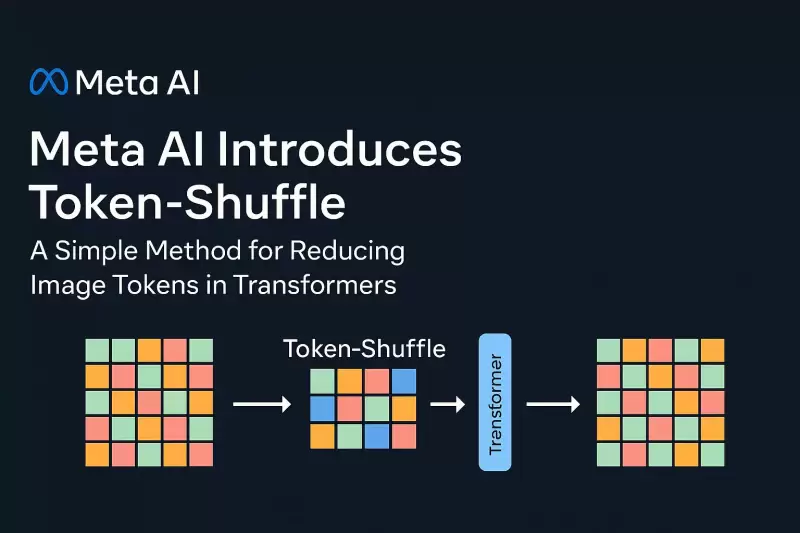

In a new contribution from Meta AI, researchers introduce Token-Shuffle, a method designed to reduce the number of image tokens processed by Transformers without altering the fundamental next-token prediction framework. The key insight behind Token-Shuffle is the dimensional redundancy in the visual vocabularies used by multimodal large language models (MLLMs). Visual tokens, typically derived from vector quantization (VQ) models, occupy high-dimensional spaces but carry lower intrinsic information density than text tokens. Token-Shuffle exploits this property by merging spatially local visual tokens along the channel dimension before Transformer processing and then restoring the original spatial structure after inference. This token fusion mechanism allows AR models to handle higher resolutions at significantly reduced computational cost while maintaining visual fidelity.

Token-Shuffle consists of two operations: token-shuffle and token-unshuffle. During input preparation, spatially neighboring tokens are merged using an MLP to form a compressed token that preserves essential local information. For a shuffle window size $s$, the number of tokens is reduced by a factor of $s^2$, leading to a substantial reduction in Transformer FLOPs. After the Transformer layers, the token-unshuffle operation reconstructs the original spatial arrangement, again assisted by lightweight MLPs.
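
A minimal NumPy sketch of the two operations, assuming token embeddings laid out on an H×W grid with width d. Each s×s window is concatenated along the channel dimension and fused by a small MLP into one d-dimensional token; unshuffle expands each fused token back to s² tokens and restores the grid. The function names, the two-layer ReLU MLPs and their random (untrained) weights are hypothetical placeholders, the Transformer and the token-prediction heads are omitted, and the exact MLP layout in the paper may differ.

```python
import numpy as np

# Fixed seed so the untrained placeholder weights are reproducible.
rng = np.random.default_rng(0)


def mlp_params(d_in: int, d_hidden: int, d_out: int) -> dict:
    """Hypothetical two-layer MLP with random, untrained weights."""
    return {
        "w1": rng.normal(0.0, d_in ** -0.5, size=(d_in, d_hidden)),
        "w2": rng.normal(0.0, d_hidden ** -0.5, size=(d_hidden, d_out)),
    }


def mlp(x: np.ndarray, p: dict) -> np.ndarray:
    """Linear -> ReLU -> Linear, applied to the last axis."""
    return np.maximum(x @ p["w1"], 0.0) @ p["w2"]


def space_to_channels(x: np.ndarray, s: int) -> np.ndarray:
    """(H, W, d) grid -> (H/s * W/s, s*s*d) sequence: each s x s window is
    concatenated along the channel dimension, windows in raster order."""
    h, w, d = x.shape
    x = x.reshape(h // s, s, w // s, s, d).transpose(0, 2, 1, 3, 4)
    return x.reshape((h // s) * (w // s), s * s * d)


def channels_to_space(y: np.ndarray, h: int, w: int, s: int) -> np.ndarray:
    """Exact inverse of space_to_channels."""
    d = y.shape[-1] // (s * s)
    y = y.reshape(h // s, w // s, s, s, d).transpose(0, 2, 1, 3, 4)
    return y.reshape(h, w, d)


def token_shuffle(x: np.ndarray, s: int, fuse: dict) -> np.ndarray:
    """Merge each s x s window into one d-dimensional token (s*s fewer tokens)."""
    return mlp(space_to_channels(x, s), fuse)


def token_unshuffle(z: np.ndarray, h: int, w: int, s: int, unfuse: dict) -> np.ndarray:
    """Expand each fused token into s*s tokens and restore the (H, W, d) grid."""
    return channels_to_space(mlp(z, unfuse), h, w, s)


if __name__ == "__main__":
    H = W = 64          # token grid, e.g. 1024x1024 pixels at 16x downsampling
    d, s = 256, 2       # embedding width and shuffle window (illustrative values)
    fuse = mlp_params(s * s * d, d, d)
    unfuse = mlp_params(d, d, s * s * d)

    x = rng.normal(size=(H, W, d))
    z = token_shuffle(x, s, fuse)             # sequence the Transformer would see
    out = token_unshuffle(z, H, W, s, unfuse)
    print(H * W, "->", z.shape[0], "tokens")  # 4096 -> 1024 tokens
    print(out.shape)                          # (64, 64, 256)
```

With s = 2 the 4,096-token grid becomes a 1,024-token sequence, the s² = 4 reduction. Because the reshape in `space_to_channels` is exactly invertible, any information loss in this sketch comes only from the MLPs.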

By compressing token sequences during Transformer computation, Token-Shuffle enables the efficient generation of high-resolution images, including those at 2048×2048 resolution. Importantly, this approach does not require modifications to the Transformer architecture itself, nor does it introduce auxiliary loss functions or pretraining of additional encoders.

Moreover, the method integrates a classifier-free guidance (CFG) scheduler specifically adapted for autoregressive generation. Rather than applying a fixed guidance scale across all tokens, the scheduler progressively adjusts guidance strength, minimizing artifacts in the early tokens and improving text-image alignment.
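
The exact shape of the schedule is not given here, so the sketch below only illustrates the idea under an assumption: a hypothetical linear ramp that uses a guidance scale of 1.0 (no amplification) for the first image token and reaches a target scale at the last one, combined with the standard classifier-free guidance formula on next-token logits. `linear_cfg_scale`, `guided_logits` and the target value 7.5 are illustrative choices, not the paper's scheduler.

```python
import numpy as np


def linear_cfg_scale(step: int, total_steps: int, w_max: float, w_min: float = 1.0) -> float:
    """Hypothetical schedule: guidance grows linearly from w_min at the
    first image token to w_max at the last one."""
    if total_steps <= 1:
        return w_max
    frac = step / (total_steps - 1)
    return w_min + (w_max - w_min) * frac


def guided_logits(cond: np.ndarray, uncond: np.ndarray, scale: float) -> np.ndarray:
    """Standard classifier-free guidance applied to next-token logits."""
    return uncond + scale * (cond - uncond)


if __name__ == "__main__":
    total = 5
    scales = [linear_cfg_scale(t, total, w_max=7.5) for t in range(total)]
    print(scales)  # [1.0, 2.625, 4.25, 5.875, 7.5]
    cond = np.array([2.0, 0.5, -1.0])
    uncond = np.array([1.0, 1.0, 0.0])
    # At scale 1.0 the guided logits equal the conditional logits.
    print(guided_logits(cond, uncond, scales[0]))
```

Other monotone schedules (for example cosine or step-wise) can be tried by swapping out `linear_cfg_scale` while keeping `guided_logits` unchanged.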

Token-Shuffle was evaluated on two major benchmarks: GenAI-Bench and GenEval. On GenAI-Bench, using a 2.7B parameter LLaMA-based model, Token-Shuffle achieved a VQAScore of 0.77 on “hard” prompts, outperforming other autoregressive models such as LlamaGen by a margin of +0.18 and diffusion models like LDM by +0.15. In the GenEval benchmark, it attained an overall score of 0.62, setting a new baseline for AR models operating in the discrete token regime.

Large-scale human evaluation on two benchmarks, GenAI-Bench and GenEval

Furthermore, Token-Shuffle was subjected to large-scale human evaluation on two benchmarks, GenAI-Bench and GenEval. The results indicated that compared to LlamaGen, Lumina-mGPT, and diffusion baselines, Token-Shuffle showed improved alignment with textual prompts, reduced visual flaws, and higher subjective image quality in most cases. However, minor degradation in logical consistency was observed relative to diffusion models, suggesting avenues for further refinement.

In terms of visual quality, Token-Shuffle demonstrated the capability to produce detailed and coherent 1024×1024 and 2048×2048 images. Ablation studies revealed that smaller shuffle window sizes (e.g., 2×2) offered the best trade-off between computational efficiency and output quality. Larger window sizes provided additional speedups but introduced minor losses in fine-grained detail.
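
The window-size trade-off can be illustrated with simple arithmetic for a 2048×2048 image, again assuming a hypothetical tokenizer with 16× downsampling (a 128×128 token grid). Each doubling of the window cuts the sequence length by 4× and the quadratic attention term by 16× (linear layers shrink only by s²), but each fused token must then summarize s² image tokens, which is one intuitive reason larger windows can lose fine-grained detail. The helper name is illustrative.

```python
def shuffled_sequence_length(side: int, window: int, downsample: int = 16) -> int:
    """Sequence length seen by the Transformer for a square image after
    shuffling with a window x window merge (hypothetical 16x tokenizer)."""
    grid = side // downsample
    if side % downsample or grid % window:
        raise ValueError("grid must be divisible by the tokenizer and the window")
    return (grid // window) ** 2


if __name__ == "__main__":
    side = 2048
    full = shuffled_sequence_length(side, 1)
    for s in (1, 2, 4):
        n = shuffled_sequence_length(side, s)
        attn = (n / full) ** 2
        print(f"s={s}: {n} tokens ({s * s} image tokens per fused token), "
              f"attention cost {attn:.4f}x of the unshuffled sequence")
```

A 3×3 window would not tile a 128×128 grid evenly, so the helper rejects it rather than silently dropping tokens.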

All in all, the researchers present a simple yet effective method to address the scalability limitations of autoregressive image generation. By merging spatially local visual tokens during the Transformer computation, their approach reduces the computational complexity without altering the fundamental next-token prediction step. This integration of spatial compression with standard AR generation is compatible with existing multimodal generation frameworks, enabling the efficient generation of high-resolution images. The experimental results highlight the potential of Token-Shuffle to push AR models beyond prior resolution limits, making high-fidelity, high-resolution generation more practical and accessible.
