Autoregressive (AR) models have achieved remarkable progress in language generation and are increasingly being explored for image synthesis. However, scaling AR models to high-resolution images presents a persistent challenge. Unlike text, which requires relatively few tokens, high-resolution images necessitate thousands of tokens, leading to a quadratic growth in computational cost. As a result, most AR-based multimodal models are constrained to low or medium resolutions, limiting their utility for detailed image generation. While diffusion models have shown promising results at high resolutions, they come with their own limitations, including complex sampling procedures and slower inference. Addressing the token efficiency bottleneck in AR models remains a crucial open problem for enabling scalable and practical high-resolution image synthesis.
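To make the scaling problem concrete, the sketch below counts tokens for a square image and the number of token pairs that full self-attention would score. It assumes a tokenizer that maps each 16×16 pixel patch to one token; that factor is an illustrative assumption, not a figure from the article, and the function names are hypothetical.

```python
def image_token_count(resolution: int, downsample: int = 16) -> int:
    """Tokens for a square image, assuming one token per downsample x downsample patch."""
    side = resolution // downsample
    return side * side


def attention_pairs(num_tokens: int) -> int:
    """Token pairs scored by full self-attention (quadratic in sequence length)."""
    return num_tokens * num_tokens


for res in (256, 512, 1024, 2048):
    n = image_token_count(res)
    print(f"{res}x{res}: {n} tokens, {attention_pairs(n)} attention pairs")
```

Under this assumption, each doubling of the resolution quadruples the token count and multiplies the attention pair count by 16.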

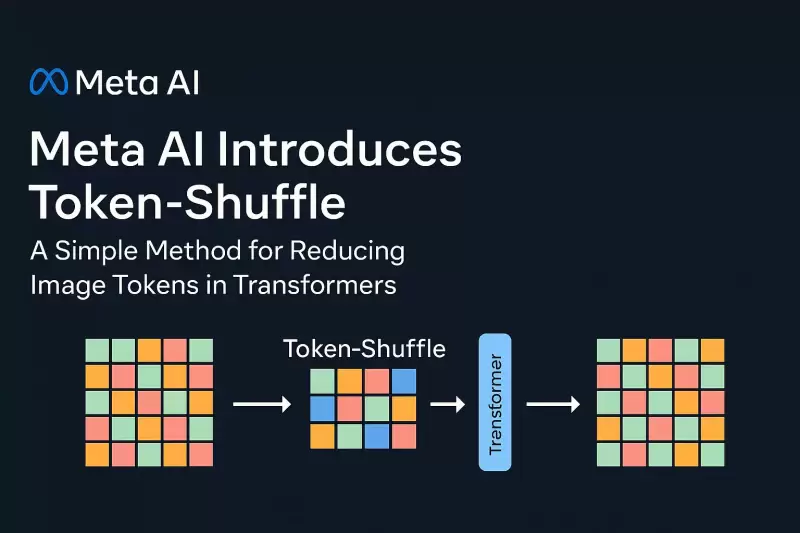

In a new contribution from Meta AI, researchers introduce Token-Shuffle, a method designed to reduce the number of image tokens processed by Transformers without altering the fundamental next-token prediction paradigm. The key insight underpinning Token-Shuffle is the dimensional redundancy in the visual vocabularies used by multimodal large language models (MLLMs). Visual tokens, typically derived from vector quantization (VQ) models, occupy high-dimensional spaces but carry a lower intrinsic information density than text tokens. Token-Shuffle exploits this property by merging spatially local visual tokens along the channel dimension before Transformer processing and restoring the original spatial structure afterward. This token fusion mechanism allows AR models to handle higher resolutions at significantly reduced computational cost while maintaining visual fidelity.

Token-Shuffle consists of two operations: token-shuffle and token-unshuffle. During input preparation, spatially neighboring tokens are merged using an MLP to form a compressed token that preserves essential local information. For a shuffle window size $s$, the number of tokens is reduced by a factor of $s^2$, leading to a substantial reduction in Transformer FLOPs. After the Transformer layers, the token-unshuffle operation reconstructs the original spatial arrangement, again assisted by lightweight MLPs.
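The spatial-to-channel rearrangement behind these two operations can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it deliberately omits the MLPs the article mentions and only shows how an s×s window of tokens can be fused along the channel dimension and later split back. The names `token_shuffle` and `token_unshuffle` and the nested-list grid layout are assumptions made for this example.

```python
from typing import List

Token = List[float]
Grid = List[List[Token]]


def token_shuffle(grid: Grid, s: int) -> Grid:
    """Merge each s x s window of tokens into one token by channel concatenation."""
    h, w = len(grid), len(grid[0])
    assert h % s == 0 and w % s == 0, "grid must be divisible by the window size"
    merged = []
    for i in range(0, h, s):
        row = []
        for j in range(0, w, s):
            fused: Token = []
            # Row-major order inside the window; token_unshuffle relies on it.
            for di in range(s):
                for dj in range(s):
                    fused.extend(grid[i + di][j + dj])
            row.append(fused)
        merged.append(row)
    return merged


def token_unshuffle(merged: Grid, s: int) -> Grid:
    """Invert token_shuffle: split each fused token back into its s x s window."""
    hm, wm = len(merged), len(merged[0])
    c = len(merged[0][0]) // (s * s)  # channels per original token
    grid: Grid = [[[] for _ in range(wm * s)] for _ in range(hm * s)]
    for i in range(hm):
        for j in range(wm):
            fused = merged[i][j]
            for k in range(s * s):
                di, dj = divmod(k, s)
                grid[i * s + di][j * s + dj] = fused[k * c:(k + 1) * c]
    return grid


# 4x4 grid of 2-channel tokens; each token stores its own (row, column).
grid = [[[float(r), float(c)] for c in range(4)] for r in range(4)]
merged = token_shuffle(grid, s=2)
print(len(merged) * len(merged[0]))  # 4 tokens instead of 16
print(len(merged[0][0]))             # 8 channels instead of 2
assert token_unshuffle(merged, s=2) == grid
```

With `s=2` the sequence shrinks by a factor of 4 while each fused token becomes 4 times wider. In the method as described, MLPs would then map between the fused channel width and the Transformer's hidden size.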

By compressing token sequences during Transformer computation, Token-Shuffle enables the efficient generation of high-resolution images, including those at 2048×2048 resolution. Importantly, this approach does not require modifications to the Transformer architecture itself, nor does it introduce auxiliary loss functions or pretraining of additional encoders.

Moreover, the method integrates a classifier-free guidance (CFG) scheduler specifically adapted for autoregressive generation. Rather than applying a fixed guidance scale across all tokens, the scheduler progressively adjusts guidance strength, minimizing early token artifacts and improving text-image alignment.
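As a rough illustration of a guidance scale that varies per token, the sketch below combines conditional and unconditional logits with the standard classifier-free guidance formula and ramps the scale linearly over the sequence. The linear shape, the starting value of 1.0, the `max_scale` of 7.5, and the function names are all assumptions made for this example; the article does not specify the schedule that Token-Shuffle actually uses.

```python
from typing import List


def guidance_scale(step: int, total_steps: int, max_scale: float = 7.5) -> float:
    """Hypothetical linear schedule: 1.0 (no guidance) at the first token, max_scale at the last."""
    if total_steps <= 1:
        return max_scale
    frac = step / (total_steps - 1)
    return 1.0 + (max_scale - 1.0) * frac


def apply_cfg(cond: List[float], uncond: List[float], scale: float) -> List[float]:
    """Standard classifier-free guidance on logits: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]


# Toy logits over a 3-entry vocabulary, reused at every step for illustration.
cond_logits = [2.0, 0.5, -1.0]
uncond_logits = [1.0, 0.5, 0.0]
total = 5
for step in range(total):
    scale = guidance_scale(step, total)
    print(step, round(scale, 3), apply_cfg(cond_logits, uncond_logits, scale))
```

At a scale of 1.0 the guided logits equal the conditional logits, so early tokens in this sketch are sampled with no extra guidance and later tokens are pushed progressively harder toward the prompt.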

Token-Shuffle was evaluated on two major benchmarks: GenAI-Bench and GenEval. On GenAI-Bench, using a 2.7B parameter LLaMA-based model, Token-Shuffle achieved a VQAScore of 0.77 on “hard” prompts, outperforming other autoregressive models such as LlamaGen by a margin of +0.18 and diffusion models like LDM by +0.15. In the GenEval benchmark, it attained an overall score of 0.62, setting a new baseline for AR models operating in the discrete token regime.

Large-scale human evaluation on two benchmarks, GenAI-Bench and GenEval

Furthermore, Token-Shuffle was subjected to large-scale human evaluation on two benchmarks, GenAI-Bench and GenEval. The results indicated that compared to LlamaGen, Lumina-mGPT, and diffusion baselines, Token-Shuffle showed improved alignment with textual prompts, reduced visual flaws, and higher subjective image quality in most cases. However, minor degradation in logical consistency was observed relative to diffusion models, suggesting avenues for further refinement.

In terms of visual quality, Token-Shuffle demonstrated the capability to produce detailed and coherent 1024×1024 and 2048×2048 images. Ablation studies revealed that smaller shuffle window sizes (e.g., 2×2) offered the best trade-off between computational efficiency and output quality. Larger window sizes provided additional speedups but introduced minor losses in fine-grained detail.

All in all, the researchers present a simple yet effective method to address the scalability limitations of autoregressive image generation. By merging spatially local visual tokens during the Transformer computation, their approach reduces the computational complexity without altering the fundamental next-token prediction step. This integration of spatial compression with standard AR generation is compatible with existing multimodal generation frameworks, enabling the efficient generation of high-resolution images. The experimental results highlight the potential of Token-Shuffle to push AR models beyond prior resolution limits, making high-fidelity, high-resolution generation more practical and accessible.
