Exploring the trend towards tiny recursive models in machine learning, with a focus on TRM and its impact on efficiency and performance.

Machine learning research is constantly looking for ways to improve both performance and efficiency. Lately, tiny recursive models have been drawing attention. Let's look at what is happening in this field.

The Rise of Tiny Recursive Models

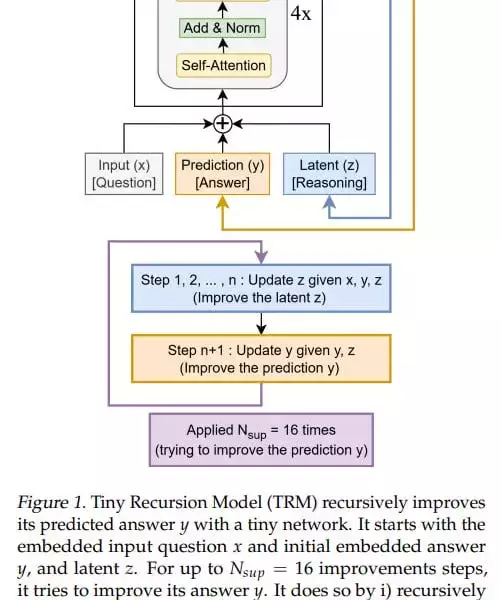

Recent work on TRM (Tiny Recursive Model) questions whether complexity is necessary. TRM has 5M-19M parameters, versus 27M in HRM (Hierarchical Reasoning Model). It represents a shift towards simplicity and efficiency, challenging the conventional wisdom that bigger is always better.

TRM: A Closer Look

TRM simplifies the recursive process by using one small network, which is essentially a standard transformer block: [self-attention, norm, MLP, norm]. The original design stacked 4 such blocks, but after experiments the authors settled on 2.
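To make the [self-attention, norm, MLP, norm] ordering concrete, here is a minimal pure-Python sketch of two stacked blocks. It is an illustration only, not the TRM implementation: the identity Q/K/V projections, the weight-free ReLU "MLP", the RMS-style norm, and the residual connections are all simplifying assumptions, and names such as `tiny_network` are hypothetical.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]  # a sequence of token vectors


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: Matrix) -> Matrix:
    """Single-head attention with identity Q/K/V projections (assumption)."""
    dim = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(dim)])
    return out


def norm(tokens: Matrix) -> Matrix:
    """Scale each token to unit root-mean-square (norm type is an assumption)."""
    result = []
    for t in tokens:
        rms = math.sqrt(sum(v * v for v in t) / len(t) + 1e-6)
        result.append([v / rms for v in t])
    return result


def mlp(tokens: Matrix) -> Matrix:
    """Token-wise toy MLP: a bare ReLU with no learned weights (assumption)."""
    return [[max(0.0, v) for v in t] for t in tokens]


def residual(tokens: Matrix, sublayer: Callable[[Matrix], Matrix]) -> Matrix:
    """Add the sublayer output back onto its input (assumption)."""
    return [[a + b for a, b in zip(t, s)] for t, s in zip(tokens, sublayer(tokens))]


def block(tokens: Matrix) -> Matrix:
    """One block in the order given in the text: attention, norm, MLP, norm."""
    tokens = norm(residual(tokens, self_attention))
    tokens = norm(residual(tokens, mlp))
    return tokens


def tiny_network(tokens: Matrix, n_blocks: int = 2) -> Matrix:
    """Stack n_blocks blocks; the text reports 2 after experiments."""
    for _ in range(n_blocks):
        tokens = block(tokens)
    return tokens


out = tiny_network([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

The output keeps the input shape (3 tokens of width 2), which is what lets the same small network be applied to its own output again and again.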

The network takes three elements as input: the input (x), the latent (z), and the prediction (y), all summed into a single value. The basic iteration, analogous to the L module in HRM, produces a latent value (z, also written z_L in the recursion formula) at the layer output. The updated z is fed back to the module input, where it is added to the input (x) again, this time as a non-zero value. The output prediction (y, also written z_H in the formula) is added as well, but since it has not been updated at this point, it changes nothing.
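The latent update described here can be sketched as a short loop. This is a toy illustration under stated assumptions, not the authors' code: `toy_net` is a hypothetical stand-in for the small network, the vectors are plain Python lists, z and y are assumed to start at zero, and the step count of 3 is arbitrary. Only the z update from the paragraph is shown; how y is eventually refined is not covered in this section and is left out.

```python
import math
from typing import Callable, List

Vector = List[float]


def add(*vectors: Vector) -> Vector:
    """Element-wise sum of equally sized vectors."""
    return [sum(values) for values in zip(*vectors)]


def toy_net(h: Vector) -> Vector:
    """Hypothetical stand-in for the small network: a fixed tanh squashing."""
    return [math.tanh(0.5 * v) for v in h]


def latent_recursion(
    x: Vector,
    y: Vector,
    z: Vector,
    net: Callable[[Vector], Vector],
    n_steps: int,
) -> Vector:
    """Feed x + y + z through the network n_steps times, updating only z."""
    for _ in range(n_steps):
        z = net(add(x, y, z))  # x and y are re-added unchanged at every step
    return z


x = [1.0, -2.0, 0.5]   # input
y = [0.0, 0.0, 0.0]    # prediction, not updated inside this loop
z = [0.0, 0.0, 0.0]    # latent, assumed to start at zero
z_new = latent_recursion(x, y, z, toy_net, n_steps=3)
```

On the first step z contributes nothing because it is zero; from the second step onward the fed-back z shifts the network input, which is the effect the paragraph describes.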

Key Insights and Performance

TRM's best variant scores higher than HRM on each benchmark: on Sudoku, 74.7%/87.4% (attention version/MLP version) versus 55%; on Maze, 85.3% (attention version; the MLP version scores 0) versus 74.5%; on ARC-AGI-1, 44.6%/29.6% (attention/MLP) versus 40.3%; and on ARC-AGI-2, 7.8%/2.4% (attention/MLP) versus 5.0%. The experiments do not look very expensive: according to the repo, runtime ranges from under 24 hours to about three days at most on 4 H100 GPUs.
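As a quick check on the size of these gaps, the snippet below computes how many percentage points the better TRM variant gains over HRM on each benchmark. The scores are the ones quoted above; the dictionary layout and the `best_gain` helper are hypothetical conveniences for this illustration.

```python
# Accuracy in percent, as quoted in the text above.
results = {
    "Sudoku": {"trm_attn": 74.7, "trm_mlp": 87.4, "hrm": 55.0},
    "Maze": {"trm_attn": 85.3, "trm_mlp": 0.0, "hrm": 74.5},
    "ARC-AGI-1": {"trm_attn": 44.6, "trm_mlp": 29.6, "hrm": 40.3},
    "ARC-AGI-2": {"trm_attn": 7.8, "trm_mlp": 2.4, "hrm": 5.0},
}


def best_gain(scores: dict) -> float:
    """Percentage-point gain of the better TRM variant over HRM."""
    best_trm = max(scores["trm_attn"], scores["trm_mlp"])
    return round(best_trm - scores["hrm"], 1)


gains = {task: best_gain(scores) for task, scores in results.items()}

for task, gain in gains.items():
    print(f"{task}: +{gain} points over HRM")
```

The gap is widest on Sudoku and narrowest on ARC-AGI-2, and the better variant differs by task: the MLP version leads on Sudoku, while the attention version leads on the other three.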

My Two Cents

While the theory of why these recursions work so well may not be fully understood yet, the empirical results are hard to ignore. TRM's architectural inventiveness, as opposed to endless model scaling, is a breath of fresh air. It would be interesting to see how the approach behaves as the dataset is scaled.

Looking Ahead

The journey of tiny recursive models is just beginning, and there is a lot more to explore. So let's keep an eye on these tiny titans and see where they take us next. Good recursions to everyone!
