Exploring the trend of tiny, recursive models in machine learning, focusing on TRM and its implications for efficiency and performance.

The world of machine learning is constantly evolving, with researchers always seeking ways to improve performance and efficiency. Lately, there's been buzz around 'Tiny model, recursive, machine learning' approaches. Let's dive into what's shaking in this field.

The Rise of Tiny Recursive Models

The recent work on TRM (Tiny Recursive Model) is questioning the necessity of complexity. TRM contains 5M-19M parameters, versus 27M in HRM. These models represent a fascinating shift towards simplicity and efficiency, challenging the conventional wisdom that bigger is always better.

TRM: A Closer Look

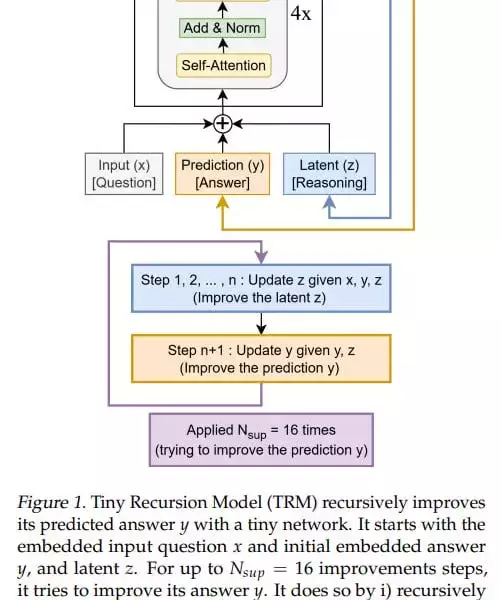

TRM simplifies the recursive process, designed with one small network, which is essentially a standard transformer block: [self-attention, norm, MLP, norm]. The model is designed so that there’s one small network, which is essentially a standard transformer block: [self-attention, norm, MLP, norm]. In the original idea, there were 4 such blocks (but after experiments they came to 2).

At the input, it has three elements: input (x), latent (z), and prediction (y); they’re all summed into one value. The basic iteration, analogous to the L module in HRM, generates a latent value (z, also denoted in the recursion formula as z_L) at the layer output, and the updated z goes back to the module input, where it now adds to input (x) not as zero. The output-prediction (y, also denoted in the formula as z_H) is also added, but since it hasn’t been updated, it doesn’t change anything.

Key Insights and Performance

TRM achieves higher numbers than HRM: 74.7%/87.4% (attention version/MLP version) versus 55% for Sudoku, 85.3% (attention version, MLP version gives 0) versus 74.5% for Maze, 44.6%/29.6% (attn/MLP) versus 40.3% for ARC-AGI-1 and 7.8%/2.4% (attn/MLP) versus 5.0% for ARC-AGI-2. The experiments don’t look very expensive; runtime from <24 hours to about three days maximum on 4*H100 according to the repo.

My Two Cents

While the theoretical underpinnings of why these recursions work so well might not be fully understood yet, the empirical results are hard to ignore. TRM's architectural inventiveness, as opposed to eternal model scaling, is a breath of fresh air. It would be interesting how it would be with dataset scaling.

Looking Ahead

The journey of 'Tiny model, recursive, machine learning' is just beginning. There's a lot more to explore. So, let's keep an eye on these tiny titans and see where they take us next. Good recursions to everyone!