Exploring the trend of tiny recursive models in machine learning, with a focus on TRM and its impact on efficiency and performance.

The world of machine learning is constantly evolving, with researchers always seeking ways to improve performance and efficiency. Lately, there's been buzz around tiny recursive models. Let's dive into what's happening in this field.

The Rise of Tiny Recursive Models

Recent work on TRM (Tiny Recursive Model) questions whether complexity is necessary. TRM has 5M-19M parameters, versus 27M in HRM (Hierarchical Reasoning Model). Models like this mark a shift towards simplicity and efficiency, challenging the conventional wisdom that bigger is always better.

TRM: A Closer Look

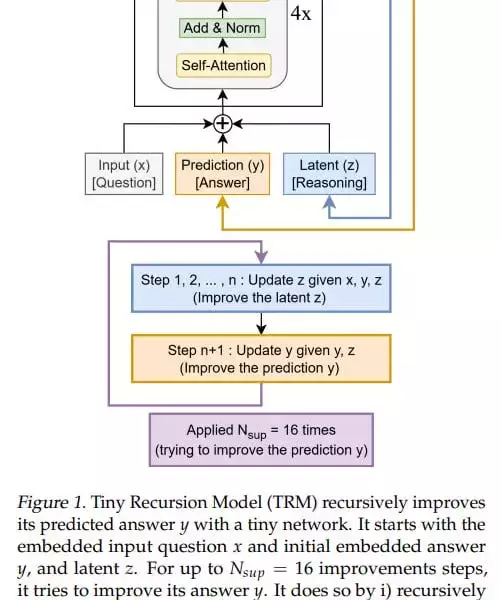

TRM simplifies the recursive process. The model is built around one small network, which is essentially a standard transformer block: [self-attention, norm, MLP, norm]. The original idea used 4 such blocks, but after experiments the authors settled on 2.
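
To get a feel for why the number of blocks matters for model size, here is a rough back-of-the-envelope sketch. It uses the common approximation of about 12·d² weights per transformer block (four d×d attention projections plus a two-layer MLP with a 4× expansion), ignoring biases, norms, and embeddings. The hidden size `d_model = 512` and the helper names are assumptions chosen for illustration; the text does not state TRM's actual hidden size or MLP layout.

```python
def block_params(d_model, mlp_ratio=4):
    """Approximate weight count of one [self-attention, norm, MLP, norm] block.

    Assumption: biases, norm parameters, and embeddings are ignored.
    """
    attn = 4 * d_model * d_model               # Q, K, V, and output projections
    mlp = 2 * d_model * (mlp_ratio * d_model)  # up- and down-projection
    return attn + mlp


def stack_params(n_blocks, d_model):
    """Approximate weight count of a stack of identical blocks."""
    return n_blocks * block_params(d_model)


d_model = 512  # hypothetical hidden size, not taken from the article
two_blocks = stack_params(2, d_model)
four_blocks = stack_params(4, d_model)
print(f"2 blocks: {two_blocks:,} weights")
print(f"4 blocks: {four_blocks:,} weights")
```

Under these assumptions, halving the number of blocks halves the weight count. The absolute numbers depend entirely on the assumed hidden size, so they should not be read as TRM's real parameter count.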

The network takes three elements: the input (x), a latent (z), and a prediction (y), which are summed into a single value before entering the network. The basic iteration, analogous to the L module in HRM, produces a new latent value (z, also denoted z_L in the recursion formula) at the layer output. The updated z then goes back to the module input, where it is added to the input (x), this time as a non-zero value. The output prediction (y, also denoted z_H in the formula) is added as well, but since it has not been updated yet, it changes nothing.
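
The basic iteration described above can be sketched in a few lines of plain Python. This is a toy illustration, not the authors' implementation: `tiny_net` is a hypothetical stand-in (an element-wise `tanh`) for the real transformer block, and starting both y and z at zero is an assumption made for the example.

```python
import math


def tiny_net(h):
    """Hypothetical stand-in for the single small network (not a real transformer block)."""
    return [math.tanh(0.5 * v) for v in h]


def latent_recursion(x, y, z, n_steps):
    """Repeat the basic iteration: sum x, y, and z, run the net, keep the output as the new z."""
    for _ in range(n_steps):
        summed = [xi + yi + zi for xi, yi, zi in zip(x, y, z)]
        z = tiny_net(summed)  # the updated latent is fed back on the next pass
    return z


x = [1.0, -2.0, 0.5]   # input
y = [0.0, 0.0, 0.0]    # prediction, held fixed during the latent updates (assumed zero here)
z0 = [0.0, 0.0, 0.0]   # latent, assumed to start at zero

z1 = latent_recursion(x, y, z0, n_steps=1)
z3 = latent_recursion(x, y, z0, n_steps=3)
print("after 1 step:", z1)
print("after 3 steps:", z3)
```

On the first pass the latent contributes nothing because it is zero; from the second pass on, the fed-back z changes what the network sees, while y stays constant throughout.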

Key Insights and Performance

TRM's best variant beats HRM on all four benchmarks: on Sudoku, 74.7%/87.4% (attention version/MLP version) versus 55%; on Maze, 85.3% (attention version; the MLP version scores 0) versus 74.5%; on ARC-AGI-1, 44.6%/29.6% (attention/MLP) versus 40.3%; and on ARC-AGI-2, 7.8%/2.4% (attention/MLP) versus 5.0%. The experiments don't look very expensive: according to the repo, runtime ranges from under 24 hours to about three days on 4×H100.
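
For a quick side-by-side view, the short script below computes the gap in percentage points between the better of the two TRM variants and HRM on each benchmark. The figures are the ones quoted in this article; the dictionary layout and the `best_gain` helper are just illustrative choices.

```python
# Accuracy figures (%) as quoted in the article: (TRM attention, TRM MLP, HRM)
results = {
    "Sudoku": (74.7, 87.4, 55.0),
    "Maze": (85.3, 0.0, 74.5),
    "ARC-AGI-1": (44.6, 29.6, 40.3),
    "ARC-AGI-2": (7.8, 2.4, 5.0),
}


def best_gain(trm_attn, trm_mlp, hrm):
    """Gap in percentage points between the better TRM variant and HRM."""
    return round(max(trm_attn, trm_mlp) - hrm, 1)


gains = {task: best_gain(*scores) for task, scores in results.items()}
for task, gain in gains.items():
    print(f"{task}: +{gain} points over HRM")
```

The largest gap is on Sudoku, where the MLP version leads, while on the other three tasks the attention version is the stronger one.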

My Two Cents

While the theory of why these recursions work so well may not be fully understood yet, the empirical results are hard to ignore. TRM's architectural inventiveness, as opposed to endless model scaling, is a breath of fresh air. It would be interesting to see how the approach behaves as the dataset scales.

Looking Ahead

The journey of tiny recursive models is just beginning, and there's a lot more to explore. So, let's keep an eye on these tiny titans and see where they take us next. Good recursions to everyone!
