-

Bitcoin

Bitcoin $119600

0.72% -

Ethereum

Ethereum $4175

-0.54% -

XRP

XRP $3.207

0.44% -

Tether USDt

Tether USDt $0.9997

-0.03% -

BNB

BNB $795.8

-0.80% -

Solana

Solana $178.4

-0.74% -

USDC

USDC $0.9998

-0.01% -

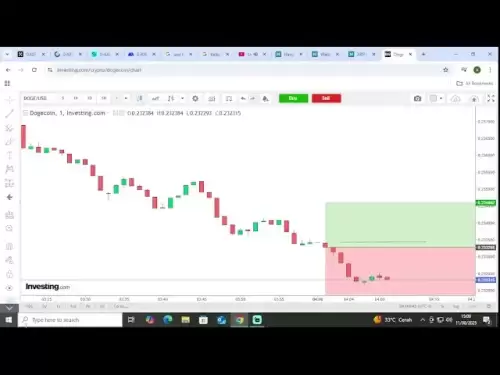

Dogecoin

Dogecoin $0.2273

-2.09% -

TRON

TRON $0.3405

-0.28% -

Cardano

Cardano $0.7864

-0.90% -

Hyperliquid

Hyperliquid $44.43

1.35% -

Chainlink

Chainlink $21.29

-0.96% -

Stellar

Stellar $0.4411

0.55% -

Sui

Sui $3.715

-2.92% -

Bitcoin Cash

Bitcoin Cash $583.0

2.23% -

Hedera

Hedera $0.2521

-2.12% -

Ethena USDe

Ethena USDe $1.000

-0.05% -

Avalanche

Avalanche $23.18

-1.96% -

Litecoin

Litecoin $125.0

2.79% -

Toncoin

Toncoin $3.311

-0.44% -

UNUS SED LEO

UNUS SED LEO $8.996

-0.53% -

Shiba Inu

Shiba Inu $0.00001305

-2.49% -

Uniswap

Uniswap $10.60

-0.11% -

Polkadot

Polkadot $3.910

-2.51% -

Dai

Dai $0.9999

-0.03% -

Cronos

Cronos $0.1640

2.00% -

Ethena

Ethena $0.7932

4.93% -

Bitget Token

Bitget Token $4.371

-1.10% -

Monero

Monero $267.2

-1.09% -

Pepe

Pepe $0.00001154

-3.46%

How to build a reinforcement learning trading environment?

Building a reinforcement learning (RL) trading environment aimed at better trading outcomes involves five steps: defining the environment and trading objectives, collecting and preprocessing historical market data, designing the trading agent and reward function, training the agent with RL algorithms, and testing and validating its performance.

Feb 22, 2025 at 11:30 am

Key Points

- Define the trading environment and objectives

- Collect and preprocess historical market data

- Design the trading agent and reward function

- Train the trading agent using reinforcement learning algorithms

- Test and evaluate the trading agent's performance

How to Build a Reinforcement Learning Trading Environment

1. Define the Trading Environment and Objectives

The first step is to define the trading environment and objectives. This includes:

- Trading horizon: The time frame for each trading decision, e.g., 5 minutes, 1 hour, or 1 day.

- Trading instruments: The financial assets that can be traded, e.g., stocks, forex, or cryptocurrencies.

- Market data: The historical and real-time data used to train and evaluate the trading agent.

- Trading rules: The constraints and limitations imposed on trading, e.g., trading fees, minimum order size, or market closure times.

- Performance metrics: The criteria used to measure the success of the trading agent, e.g., return on investment, Sharpe ratio, or maximum drawdown.
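These choices can be made concrete in code. The sketch below is a minimal, assumption-laden illustration rather than a reference implementation: one asset, one price per decision step (the trading horizon), a two-action space (hypothetical encoding 0 = flat, 1 = long), a proportional fee as the only trading rule, and a reward equal to the log change in portfolio value. The class name `TradingEnv` and its parameters are hypothetical; the `reset()`/`step()` loop is loosely modeled on the convention used by the Gymnasium library, simplified here to return `(observation, reward, done)`.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class TradingEnv:
    """Minimal single-asset environment (hypothetical design, not a library API).

    Actions: 0 = flat (hold cash), 1 = long (hold the asset).
    Reward: log change in portfolio value over one step, net of fees.
    """
    prices: List[float]        # one price per decision step (the trading horizon)
    window: int = 3            # past log returns included in each observation
    fee_rate: float = 0.001    # trading rule: proportional fee per position change
    t: int = field(init=False, default=0)
    position: int = field(init=False, default=0)

    def _observation(self) -> List[float]:
        # Last `window` log returns, followed by the current position.
        rets = [math.log(self.prices[i] / self.prices[i - 1])
                for i in range(self.t - self.window + 1, self.t + 1)]
        return rets + [float(self.position)]

    def reset(self) -> List[float]:
        if len(self.prices) < self.window + 2:
            raise ValueError("need at least window + 2 prices")
        self.t = self.window       # first step with a full window of returns
        self.position = 0
        return self._observation()

    def step(self, action: int) -> Tuple[List[float], float, bool]:
        if action not in (0, 1):
            raise ValueError("action must be 0 (flat) or 1 (long)")
        # Changing position costs fee_rate of the whole portfolio value.
        cost = self.fee_rate if action != self.position else 0.0
        self.position = action
        self.t += 1
        # Log return earned by the new position over this step, minus the fee.
        asset_log_ret = math.log(self.prices[self.t] / self.prices[self.t - 1])
        reward = self.position * asset_log_ret + math.log(1.0 - cost)
        done = self.t >= len(self.prices) - 1
        return self._observation(), reward, done
```

A trivial always-long policy would call `env.reset()` once and then `env.step(1)` until `done` is `True`. Keeping the market rules (prices, fees) inside the environment and the decision-making outside it means different agents can be swapped in and compared under identical conditions.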

2. Collect and Preprocess Historical Market Data

Historical market data is essential for training and evaluating the trading agent. This data can be collected from sources such as:

- Data vendors: Companies like Bloomberg, Reuters, and FactSet provide comprehensive historical market data for various financial assets.

- Exchange APIs: Many exchanges offer REST APIs for downloading historical trades and candlestick (OHLCV) data, and WebSocket feeds for streaming real-time data.

- Free or low-cost data services: Platforms such as Yahoo Finance and Nasdaq Data Link (formerly Quandl) provide free or low-cost historical market data for many assets.
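Whatever the source, historical bars often arrive as CSV. As a hedged sketch, the parser below assumes a hypothetical header `timestamp,open,high,low,close,volume` with Unix-second timestamps; real vendor and exchange exports name and order their columns differently, so the keys would need adapting. Empty cells are kept as `None` so that cleaning can happen as a separate, explicit step.

```python
import csv
import io
from typing import Dict, List, Optional


def _to_float(value: str) -> Optional[float]:
    # Treat empty cells as missing instead of failing; cleaning happens later.
    value = value.strip()
    return float(value) if value else None


def load_ohlcv_csv(text: str) -> List[Dict[str, Optional[float]]]:
    """Parse OHLCV bars from CSV text.

    Hypothetical schema: timestamp,open,high,low,close,volume
    (timestamp in Unix seconds). Real sources use different column names.
    """
    bars = []
    for rec in csv.DictReader(io.StringIO(text)):
        bar: Dict[str, Optional[float]] = {"timestamp": int(rec["timestamp"])}
        for col in ("open", "high", "low", "close", "volume"):
            bar[col] = _to_float(rec[col])
        bars.append(bar)
    return bars
```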

Once collected, the data needs to be preprocessed to ensure its quality and consistency:

- Data cleaning: Remove duplicate, missing, or invalid data.

- Data transformation: Convert data into a format compatible with the trading agent, e.g., time series or feature vectors.

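- Data normalization: Rescale features (e.g., min-max scaling to a fixed range) or standardize them (zero mean, unit variance) so they have comparable magnitudes. Compute the scaling statistics on the training period only; using the full dataset leaks future information into training (look-ahead bias).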
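The three preprocessing steps can be sketched with the standard library alone. This is a minimal illustration under stated assumptions: each bar is a hypothetical `(timestamp, price)` pair with `None` marking a missing value, log returns and sliding windows stand in for the transformation step, and a z-score fitted on a chronologically earlier training split stands in for normalization. All function names are hypothetical.

```python
import math
import statistics
from typing import List, Optional, Sequence, Tuple


def clean(bars: Sequence[Tuple[int, Optional[float]]]) -> List[float]:
    """Data cleaning: sort by timestamp, then drop duplicate timestamps and
    missing, non-finite, or non-positive prices. Returns the clean price series."""
    seen = set()
    prices = []
    for ts, price in sorted(bars, key=lambda bar: bar[0]):
        if ts in seen or price is None or not math.isfinite(price) or price <= 0:
            continue
        seen.add(ts)
        prices.append(price)
    return prices


def log_returns(prices: Sequence[float]) -> List[float]:
    """Data transformation: prices -> log returns, typically closer to stationary."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def standardize(train: Sequence[float],
                test: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Data normalization: z-score both splits with the training split's mean and
    standard deviation, so nothing from the test period leaks into training."""
    mu = statistics.fmean(train)
    sigma = statistics.pstdev(train) or 1.0  # guard against a constant series

    def scale(xs: Sequence[float]) -> List[float]:
        return [(x - mu) / sigma for x in xs]

    return scale(train), scale(test)


def windows(series: Sequence[float], size: int) -> List[List[float]]:
    """Data transformation: overlapping windows used as feature vectors."""
    return [list(series[i - size:i]) for i in range(size, len(series) + 1)]
```

In practice the return series would be split chronologically (for example, the first 80% for training) before calling `standardize`, and windows would be built afterwards, so the scaling never sees the test period.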

3. Design the Trading Agent and Reward Function

The trading agent is the core component of the reinforcement learning system. It receives observations from the market environment and chooses trading actions so as to maximize its cumulative reward, which encodes the specified performance metrics. The agent can be designed using various approaches:

- Rule-based: Agents that follow predefined rules and strategies for buying and selling. They are not learned, but they make useful baselines.

- Technical analysis: Agents that use technical indicators and chart patterns to make trading decisions. In an RL setting, such indicators are often supplied as features in the agent's observations.

- Machine learning: Agents whose decision-making is learned from data, such as a neural network trained to predict price movements or, in RL, to map observations directly to trading actions.
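The difference between these approaches is where the decision function comes from. A rule-based or technical-analysis agent is a fixed function from market history to an action; a learned agent replaces that function with one fitted to data. As an illustration only, here is a moving-average crossover rule with arbitrary window lengths, using a hypothetical 0 = flat / 1 = long action encoding. A rule like this is also a natural baseline for judging whether a trained RL agent adds anything.

```python
from statistics import fmean
from typing import Sequence


def ma_crossover_action(prices: Sequence[float], short: int = 3, long: int = 5) -> int:
    """Rule-based technical-analysis policy: 1 (long) when the short moving
    average is above the long one, else 0 (flat). Window lengths are arbitrary."""
    if short >= long:
        raise ValueError("short window must be shorter than long window")
    if len(prices) < long:
        return 0  # not enough history yet: stay flat
    short_ma = fmean(prices[-short:])
    long_ma = fmean(prices[-long:])
    return 1 if short_ma > long_ma else 0
```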

The reward function is a critical component that guides the agent's learning. It defines the reward or penalty the agent receives after each action, and the agent learns to maximize the cumulative reward over time, so the reward function must align with the trading objectives:

- Absolute return: Reward the agent for maximizing the total return on investment.

- Risk-adjusted return: Reward the agent for higher returns while penalizing risk, e.g., by subtracting a penalty proportional to the volatility of recent returns.

- Sharpe ratio: Reward the agent for maximizing the Sharpe ratio, which measures risk-adjusted performance.
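A minimal sketch of the three reward styles listed above, assuming the environment computes the reward every step from portfolio values and keeps a short window of recent per-step returns (`recent_returns`, a hypothetical input). The function names and the `risk_aversion` weight are illustrative choices, not standard definitions; computing the Sharpe ratio over a rolling window is one way to turn an episode-level metric into a per-step signal.

```python
import math
import statistics
from typing import Sequence


def absolute_return_reward(prev_value: float, new_value: float) -> float:
    """Per-step log return of portfolio value. The sum over an episode equals
    log(final / initial), so maximizing it maximizes total return."""
    return math.log(new_value / prev_value)


def risk_adjusted_reward(step_return: float, recent_returns: Sequence[float],
                         risk_aversion: float = 0.5) -> float:
    """Mean-variance style: this step's return minus a penalty proportional to
    the sample variance of recent returns (risk_aversion is a tuning knob)."""
    if len(recent_returns) < 2:
        return step_return
    return step_return - risk_aversion * statistics.variance(recent_returns)


def sharpe_reward(recent_returns: Sequence[float], risk_free: float = 0.0) -> float:
    """Sharpe ratio (mean excess return / std dev) over a recent window of
    per-step returns, not annualized; 0.0 if it is undefined."""
    if len(recent_returns) < 2:
        return 0.0
    excess = [r - risk_free for r in recent_returns]
    sd = statistics.stdev(excess)
    return statistics.fmean(excess) / sd if sd > 0 else 0.0
```

The absolute-return reward is the easiest to interpret; the risk-adjusted and Sharpe variants are intended to steer the agent toward smoother equity curves at some cost in raw return. Which one fits depends on the objectives fixed in step 1.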

4. Train the Trading Agent Using Reinforcement Learning Algorithms

Reinforcement learning algorithms enable the trading agent to learn trading strategies from experience. The agent interacts with the trading environment and takes actions, and the algorithm adjusts the agent's behavior based on the rewards it receives.

- Value-based methods: Algorithms like Q-learning and SARSA estimate the value (expected cumulative reward) of each state-action pair and choose the actions with the highest estimated value.

- Policy-based methods: Algorithms like REINFORCE directly parameterize and optimize the trading policy, which defines the probability of taking each action in a given state. Actor-critic methods such as A2C combine a learned policy with a learned value function.

- Deep reinforcement learning: Algorithms like deep Q-networks (DQNs) and deep policy-gradient methods use neural networks as function approximators, which lets the agent handle large, high-dimensional observations such as long windows of prices and indicators.
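Tabular Q-learning is the simplest of the value-based methods; deep variants such as DQN replace the table with a neural network. The hedged sketch below runs it on a deliberately artificial, perfectly alternating return series (a toy assumption, not market data), with a hypothetical two-state encoding (direction of the last return) and the actions 0 = flat / 1 = long. The reward is the return earned by the chosen position over the next step; the hyperparameters are illustrative, not tuned.

```python
import random
from typing import Dict, List, Tuple

State = int   # hypothetical encoding: 0 = last return was down, 1 = up
Action = int  # 0 = flat, 1 = long


def toy_returns(n: int) -> List[float]:
    """Synthetic, perfectly mean-reverting log returns: +1%, -1%, +1%, ...
    Chosen only so the optimal policy is obvious; real markets are not like this."""
    return [0.01 if i % 2 == 0 else -0.01 for i in range(n)]


def q_learning(returns: List[float], episodes: int = 200, alpha: float = 0.1,
               gamma: float = 0.9, epsilon: float = 0.1,
               seed: int = 0) -> Dict[Tuple[State, Action], float]:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in (0, 1) for a in (0, 1)}
    for _ in range(episodes):
        for t in range(1, len(returns)):
            state = 1 if returns[t - 1] > 0 else 0
            # Epsilon-greedy selection; ties go to action 0 (flat).
            if rng.random() < epsilon:
                action = rng.choice((0, 1))
            else:
                action = max((0, 1), key=lambda a: q[(state, a)])
            # Reward: return earned over the next step by the chosen position.
            reward = action * returns[t]
            next_state = 1 if returns[t] > 0 else 0
            terminal = t == len(returns) - 1
            best_next = max(q[(next_state, a)] for a in (0, 1))
            target = reward if terminal else reward + gamma * best_next
            # Q-learning update: move Q(s, a) toward the bootstrapped target.
            q[(state, action)] += alpha * (target - q[(state, action)])
    return q
```

Because the toy series alternates, the learned greedy policy goes long after a down move and stays flat after an up move. On real data, a state this small would be far too coarse, and the result would still need out-of-sample testing.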

5. Test and Evaluate the Trading Agent's Performance

Once the trading agent is trained, its performance needs to be tested and evaluated:

- Historical backtesting: Run the agent on historical market data that was not used for training (out-of-sample data) to assess its performance under different market conditions.

- Paper trading: Simulate live trading using real-time market data without risking actual capital.

- Live trading: Deploy the agent in a live trading environment with actual capital to test its real-world performance.

The evaluation process should involve monitoring the agent's performance metrics, identifying areas for improvement, and making necessary adjustments to the trading strategy or reward function.
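Whichever stage the agent is at (backtest, paper, or live trading), the performance metrics from step 1 can be computed from its equity curve. A minimal sketch, assuming simple per-period returns, a zero risk-free rate, and a hypothetical `evaluate` helper; `periods_per_year` is an assumption about bar frequency that must match the data.

```python
import math
import statistics
from typing import Dict, Sequence


def evaluate(equity: Sequence[float], periods_per_year: int = 252) -> Dict[str, float]:
    """Backtest metrics from an equity curve (portfolio value per step).

    periods_per_year = 252 assumes daily bars on a stock-exchange calendar;
    daily crypto bars trade every day, so 365 would be more appropriate there.
    """
    if len(equity) < 3:
        raise ValueError("need at least three equity points")
    rets = [b / a - 1.0 for a, b in zip(equity, equity[1:])]
    sd = statistics.stdev(rets)
    # Annualized Sharpe ratio with a zero risk-free rate.
    sharpe = statistics.fmean(rets) / sd * math.sqrt(periods_per_year) if sd > 0 else 0.0
    # Maximum drawdown: largest fractional drop from a running peak.
    peak, max_dd = equity[0], 0.0
    for value in equity:
        peak = max(peak, value)
        max_dd = max(max_dd, (peak - value) / peak)
    return {
        "total_return": equity[-1] / equity[0] - 1.0,
        "sharpe_annualized": sharpe,
        "max_drawdown": max_dd,
    }
```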

FAQs

Q: What are the advantages of using reinforcement learning for trading?

A: Reinforcement learning lets trading agents learn strategies from experience and optimize them directly for a chosen reward, rather than relying on predefined rules or constant human intervention. With periodic retraining, agents can also adapt to changing market conditions.

Q: What types of trading agents can be trained using reinforcement learning?

A: Reinforcement learning trains agents whose policy is learned rather than hand-coded, typically a machine learning model such as a neural network. Technical indicators can be included in such an agent's observations, and rule-based strategies serve as baselines to compare it against.

Q: How do you select the right reward function for a reinforcement learning trading agent?

A: The reward function should align with the desired trading objectives. Common reward functions include absolute return, risk-adjusted return, and Sharpe ratio.

Q: How do you evaluate the performance of a reinforcement learning trading agent?

A: Performance evaluation involves historical backtesting, paper trading, and live trading. Key performance metrics include return on investment, risk-adjusted return, and maximum drawdown.

Q: What are the challenges of building a reinforcement learning trading environment?

A: The challenges include collecting high-quality market data, designing an effective reward function, selecting the right trading agent and reinforcement learning algorithm, and evaluating the agent's performance in a realistic trading environment.

Disclaimer: info@kdj.com

The information provided is not trading advice. kdj.com does not assume any responsibility for any investments made based on the information provided in this article. Cryptocurrencies are highly volatile and it is highly recommended that you invest with caution after thorough research!

If you believe that the content used on this website infringes your copyright, please contact us immediately (info@kdj.com) and we will delete it promptly.
