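Machine learning algorithms have been developed to handle many different tasks, from making predictions to matching patterns to generating matching images.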

Recent years have seen a massive increase in the capabilities of machine learning algorithms, which can now perform a wide range of tasks, from making predictions to matching patterns or generating images that match text prompts. To enable them to take on such diverse roles, these models have been given a broad spectrum of capabilities, but one thing they rarely are is efficient.

In the present era of exponential growth in the field, rapid advancements often come at the expense of efficiency. It is faster, after all, to produce a very large kitchen-sink model filled with redundancies than it is to produce a lean, mean inferencing machine.

But as these algorithms mature, more attention is being directed at cutting them down to smaller sizes. Even the most useful tools are of little value if they require so many computational resources that they are impractical for real-world applications. As you might expect, the more complex an algorithm is, the harder it is to shrink. That is what makes Hugging Face’s recent announcement so exciting: the company has taken an axe to vision language models (VLMs), releasing new additions to the SmolVLM family, including SmolVLM-256M, the smallest VLM in the world.

With just 256 million parameters, SmolVLM-256M is an impressive example of optimization done right. Despite its small size, the model performs very well in tasks such as captioning, document-based question answering, and basic visual reasoning, outperforming older, much larger models like Idefics 80B, released just 17 months earlier. The SmolVLM-500M model provides an additional performance boost, with its 500 million parameters offering a middle ground between size and capability for those needing some extra headroom.
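
To put those parameter counts in perspective, here is a back-of-the-envelope sketch of how much memory the weights alone would occupy. The parameter counts come from the figures above; the numeric precisions and the helper name `weight_memory_gb` are assumptions made for illustration, and real memory use will be higher once activations and runtime overhead are included.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Parameter counts are taken from the article; the precisions listed
# here are assumptions, not something the article specifies.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}

MODELS = {
    "SmolVLM-256M": 256_000_000,
    "SmolVLM-500M": 500_000_000,
    "Idefics 80B": 80_000_000_000,
}


def weight_memory_gb(num_params: int, dtype: str = "bfloat16") -> float:
    """Return the approximate size of the weights in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in MODELS.items():
    print(f"{name}: ~{weight_memory_gb(params):.2f} GB of weights in bfloat16")
```

Under these assumptions, the smallest model's weights come to roughly half a gigabyte at 16-bit precision, while an 80-billion-parameter model needs on the order of 160 GB.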

Hugging Face achieved these advancements by refining its approach to vision encoders and data mixtures. The new models adopt the SigLIP base patch-16/512 encoder, which, though smaller than its predecessor, processes images at a higher resolution. This choice aligns with recent trends seen in Apple and Google research, which emphasize higher resolution for improved visual understanding without drastically increasing parameter counts.
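
As a rough illustration of what higher resolution means for the encoder, the sketch below counts image patches. It assumes that "patch-16/512" follows the usual naming convention of 16x16-pixel patches over a 512x512 input; the 384-pixel comparison value and the function name `num_patches` are hypothetical and chosen only to show the trend.

```python
def num_patches(image_size: int, patch_size: int) -> int:
    """Number of non-overlapping square patches covering a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size
    return per_side * per_side


# Assumed reading of "patch-16/512": 16-pixel patches, 512-pixel input.
print(num_patches(512, 16))  # 1024

# Hypothetical lower-resolution input with the same patch size, for comparison.
print(num_patches(384, 16))  # 576
```

The patch count grows with the square of the resolution, so a higher-resolution input gives the encoder many more regions to look at without adding any parameters.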

The team also employed innovative tokenization methods to further streamline their models. By improving how sub-image separators are represented during tokenization, the models gained greater stability during training and achieved better quality outputs. For example, multi-token representations of image regions were replaced with single-token equivalents, enhancing both efficiency and accuracy.
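
The effect of collapsing a separator into a single token can be sketched with a toy tokenizer. This is not Hugging Face's tokenizer: the splitting rule, the function `toy_tokenize`, and the separator string `<row_1_col_2>` are all assumptions made for illustration. The point is only that a string registered as a special token costs one token instead of several.

```python
import re


def toy_tokenize(text: str, special_tokens: frozenset[str] = frozenset()) -> list[str]:
    """Split text into tokens; strings listed in special_tokens stay whole.

    Everything else is split into letter runs, digit runs, and single
    punctuation marks, which stands in for a real subword tokenizer.
    """
    tokens: list[str] = []
    # Isolate angle-bracket tags first, then either keep or split each one.
    for chunk in re.split(r"(<[^<>]+>)", text):
        if not chunk:
            continue
        if chunk in special_tokens:
            tokens.append(chunk)
        else:
            tokens.extend(re.findall(r"[A-Za-z]+|\d+|[^\sA-Za-z\d]", chunk))
    return tokens


separator = "<row_1_col_2>"  # hypothetical sub-image separator

multi = toy_tokenize(separator)
single = toy_tokenize(separator, special_tokens=frozenset({separator}))

print(len(multi), multi)    # the separator is split into several pieces
print(len(single), single)  # the separator is kept as one token
```

An image split into many sub-images repeats such separators many times, so shortening each one reduces sequence length and gives the model one consistent symbol to learn rather than a pattern spread across several tokens.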

In another advance, the data mixture strategy was fine-tuned to emphasize document understanding and image captioning, while maintaining a balanced focus on essential areas like visual reasoning and chart comprehension. These refinements are reflected in the models’ improved benchmark results, which show both the 256M and 500M models outperforming Idefics 80B in nearly every category.

By demonstrating that small can indeed be mighty, these models pave the way for a future where advanced machine learning capabilities are both accessible and sustainable. If you want to help bring that future into being, go grab these models now. Hugging Face has open-sourced them, and with only modest hardware requirements, just about anyone can get in on the action.

免責聲明:info@kdj.com

所提供的資訊並非交易建議。 kDJ.com對任何基於本文提供的資訊進行的投資不承擔任何責任。加密貨幣波動性較大,建議您充分研究後謹慎投資!

如果您認為本網站使用的內容侵犯了您的版權,請立即聯絡我們(info@kdj.com),我們將及時刪除。

-

- Luna Crypto崩潰:從數十億美元失去到一個安靜的複出?

- 2025-07-04 02:35:18

- 探索戲劇性的露娜加密貨幣崩潰,目前的狀態,以及它是建立穩定的未來還是面臨另一個崩潰。

-

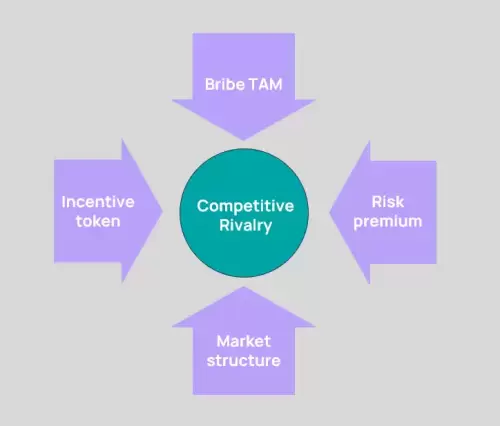

- 賄賂的四種力量:解碼加密動機的動力

- 2025-07-04 02:35:18

- 探索加密貨幣中的“四個動力,四個力量”,特別是在酒中,揭示了激勵措施的效率及其對代幣持有人的影響。讓我們潛入!

-

- Solana Defi積累:騎波浪還是只是在努力?

- 2025-07-04 02:40:12

- Solana Defi認為有趣的積累趨勢。從Memecoin的潮流到機構ETH的購買,我們深入介紹了加密貨幣的心臟。

-

-

- 比特幣的公牛運行:標準特許和ETF流入效應

- 2025-07-04 00:30:13

- 標準特許預測,到2025年,在ETF流入的推動下,比特幣將達到200,000美元。這對加密的未來意味著什麼?

-

- 比特幣,加密貨幣和市場情緒:騎看漲的浪潮?

- 2025-07-04 01:10:12

- 解碼比特幣,加密和市場情緒的最新趨勢。新的公牛在地平線上嗎?讓我們潛水。

-

-

- 在線市場:對於硬幣收藏家而言,更容易或更難?

- 2025-07-04 01:30:13

- 探索在線市場如何重塑硬幣收集,使訪問更加容易,但提出了諸如騙局和真實性問題之類的新挑戰。

-